Welcome aboard the AI Express, where the next stop is the revolutionary world of prompt chaining.

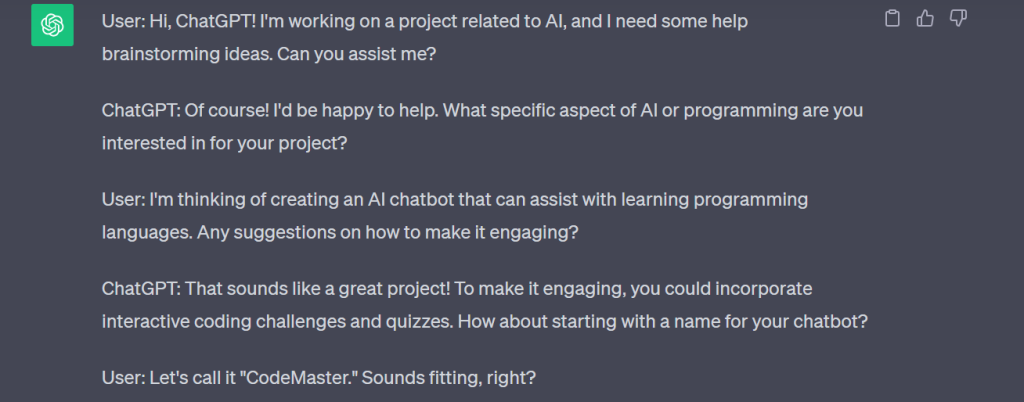

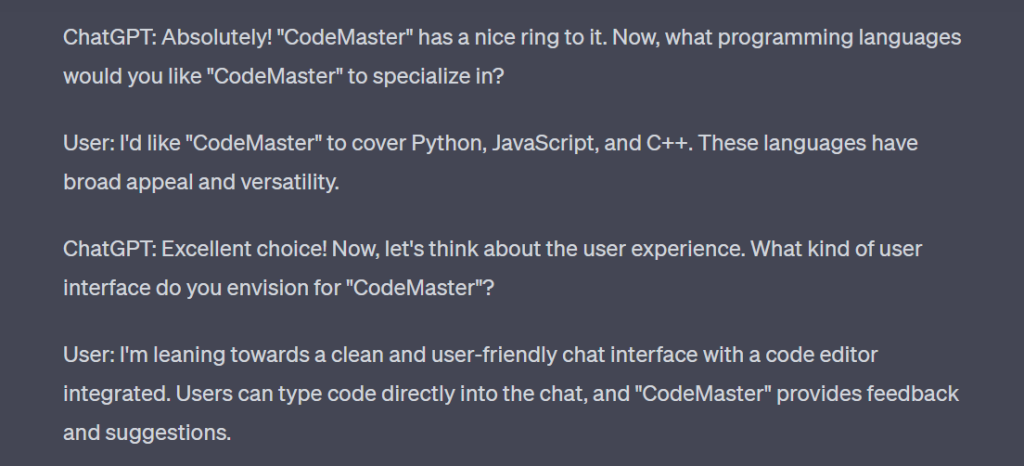

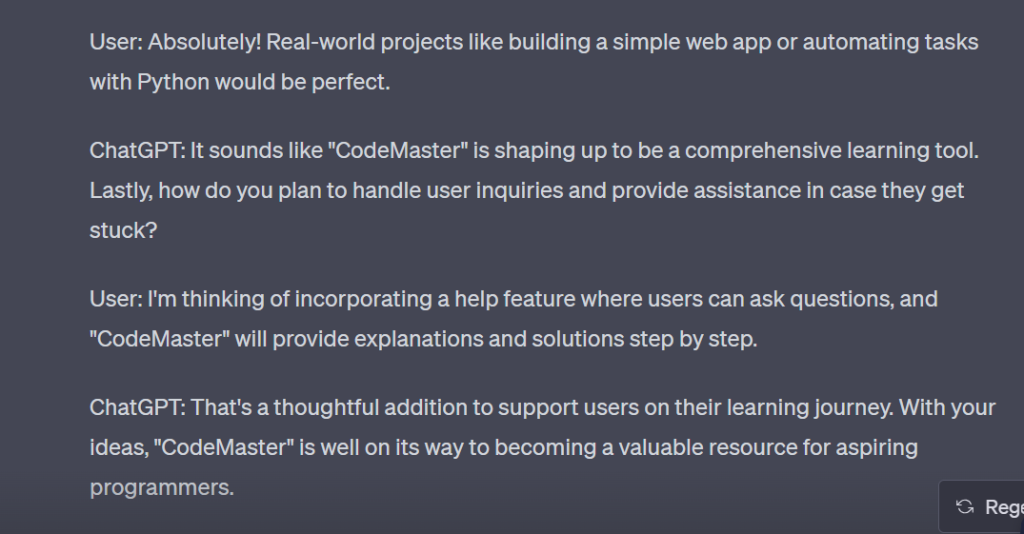

Prompt chaining is a technique that’s transforming how we interact with AI. In essence, it takes the output of one prompt and uses it as the input for the next, creating a continuous chain of interactions. Its applicability is vast, ranging from drafting emails and generating content ideas to advanced tasks such as web scraping and Python scripting.

Prompt chaining is most often applied to Large Language Models (LLMs). Its power lies in breaking a complex task down into manageable sub-steps: each prompt handles one sub-step, and each output steers the next prompt, so the chain of responses leads the AI towards the desired outcome. Because the model reasons about one thing at a time, its responses tend to be more sophisticated and nuanced.

In this article, we provide an overview of prompt chaining for the command model, from constructing individual prompts to common chain patterns.

Large Language Model Chaining

LLM chaining is a pivotal technique in the realm of AI. It logically connects one or more large language models, creating what can best be described as ‘chains’. This process hinges on three key aspects: training, operation, and utility.

Chain-of-Thought (CoT) prompting is closely related to LLM chaining. It asks the model to write out intermediate reasoning steps before giving its answer, which amplifies the reasoning capabilities of large language models and enables them to generate more sophisticated responses.

The true power of LLM chaining lies in its ability to break down complex tasks into smaller, manageable steps. Each step can be completed by an independent run of an LLM, providing a transparent and controllable human-AI interaction.

However, LLM chaining isn’t without its challenges; it requires careful scaffolding for transforming intermediate node outputs and thorough debugging. Yet, with the right approach and understanding, LLM chaining opens a whole new dimension in AI development.
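To make the idea concrete, here is a minimal sketch of a chain in Python. It assumes a hypothetical `fake_llm` function standing in for a real model call, and a hypothetical `run_chain` helper; neither comes from a specific library. Each step pairs a prompt template with a small transform, which is the scaffolding that cleans up one output before it feeds the next prompt.

```python
from typing import Callable, List, Tuple


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; swap in your own client."""
    return f"[model output for: {prompt}]"


# A step is a prompt template plus a transform applied to the raw model output.
Step = Tuple[str, Callable[[str], str]]


def run_chain(steps: List[Step], llm: Callable[[str], str], initial_input: str) -> List[str]:
    """Run the steps in order, feeding each cleaned output into the next prompt."""
    outputs = []
    current = initial_input
    for template, transform in steps:
        prompt = template.format(input=current)
        raw = llm(prompt)
        current = transform(raw)  # scaffolding between two nodes of the chain
        outputs.append(current)   # keep intermediate results for debugging
    return outputs


steps = [
    ("Summarize this text: {input}", str.strip),
    ("Translate this summary into French: {input}", str.strip),
]
results = run_chain(steps, fake_llm, "Prompt chaining splits a task into steps.")
for number, output in enumerate(results, start=1):
    print(f"Step {number}: {output}")
```

Keeping every intermediate output, rather than only the last one, is what makes a chain easier to debug: you can see which step went off course.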

Step-by-Step Guide: Constructing Prompts for the Command Model

Ready to dive into the realm of prompt chaining? Here’s a comprehensive, step-by-step guide to help you master the art and science of constructing prompts for the command model:

Define your objective

The first step involves identifying the end goal. What do you want the AI to do? Be as specific as possible, whether it’s generating a blog post, writing code, or answering questions.

Decompose the task

Break down the overall task into smaller, manageable sub-tasks. Each sub-task should correspond to a step that the AI can accomplish.

Craft the prompts

For each sub-task, design a clear and precise prompt. A well-crafted prompt guides the AI towards the desired output.

Test the prompts

Run each prompt individually to ensure it generates the expected result. This step is crucial for troubleshooting before you start the chaining process.
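One way to test prompts in isolation is a small harness that runs each template against a sample input and applies a simple check to the result. The sketch below is only illustrative: `PromptCase`, `check_prompts`, and the canned `stub_llm` replies are hypothetical names and data, used so the example runs without a real model.

```python
from typing import Callable, Dict, List, NamedTuple


class PromptCase(NamedTuple):
    name: str
    template: str
    sample_input: str
    check: Callable[[str], bool]  # returns True when the output looks acceptable


def stub_llm(prompt: str) -> str:
    """Hypothetical model stub that returns canned replies."""
    if prompt.startswith("List"):
        return "1. Calm\n2. Focus\n3. Sleep"
    return "Meditation: A Short Guide"


def check_prompts(cases: List[PromptCase], llm: Callable[[str], str]) -> Dict[str, bool]:
    """Run every prompt on its own and record whether its check passed."""
    report = {}
    for case in cases:
        output = llm(case.template.format(input=case.sample_input))
        report[case.name] = case.check(output)
    return report


cases = [
    PromptCase("title", "Write a title about {input}.", "meditation",
               lambda out: 0 < len(out) <= 80),
    PromptCase("outline", "List three benefits of {input}.", "meditation",
               lambda out: len(out.splitlines()) == 3),
]
report = check_prompts(cases, stub_llm)
print(report)
```

With a real model, the checks would usually be looser (length, format, required keywords), since the exact wording changes from run to run.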

Chain the prompts

Begin with the initial prompt and use its output as the input for the subsequent prompt. Repeat this process until all prompts have been chained.

Evaluate and refine

Assess the final output against your original objective. If it doesn’t meet your expectations, tweak the prompts and repeat the process.
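The evaluate-and-refine step can also be partly automated. The following sketch assumes you can express your objective as a check function; `refine_until_ok`, `short_enough`, and `add_length_limit` are hypothetical helpers, and `stub_llm` is a canned stand-in for a real model.

```python
from typing import Callable, Tuple


def refine_until_ok(
    prompt: str,
    llm: Callable[[str], str],
    is_acceptable: Callable[[str], bool],
    revise: Callable[[str, str], str],
    max_rounds: int = 3,
) -> Tuple[str, str, bool]:
    """Re-run a prompt, revising it after each failed check.

    Returns the last prompt used, its output, and whether the check passed.
    """
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    output = ""
    for round_number in range(1, max_rounds + 1):
        output = llm(prompt)
        if is_acceptable(output):
            return prompt, output, True
        if round_number < max_rounds:
            prompt = revise(prompt, output)  # tweak the prompt and try again
    return prompt, output, False


def stub_llm(prompt: str) -> str:
    """Hypothetical model stub: only obeys an explicit length limit."""
    if "at most 10 words" in prompt:
        return "Meditation lowers stress and sharpens focus."
    return ("Meditation is a practice that has been around for thousands "
            "of years and offers many benefits.")


def short_enough(output: str) -> bool:
    return len(output.split()) <= 10


def add_length_limit(prompt: str, output: str) -> str:
    return prompt + " Use at most 10 words."


final_prompt, final_output, ok = refine_until_ok(
    "Summarize the benefits of meditation.", stub_llm, short_enough, add_length_limit
)
print(ok, final_prompt)
```

Capping the number of rounds matters in practice: without a limit, a check the model can never satisfy would loop forever.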

Remember, practice makes perfect. Prompt chaining might seem complex at first, but with perseverance and iterative refinement, you’ll be able to harness the power of this innovative technique to optimize AI interactions.

Examples of Constructing Prompts for the Command Model

Here, we explore some practical examples to illustrate the process of prompt chaining in action:

Example 1: Writing a blog post

- Define Your Objective: Write a blog post about “The Benefits of Meditation.”

- Decompose the Task: Break it down into sub-tasks:

  - Generate a catchy title

  - Outline the main points

  - Write the introduction

  - Elaborate on each point

  - Conclude the post

- Craft the Prompts: Create a prompt for each sub-task:

  - “Generate a catchy title for a blog post on meditation benefits.”

  - “Outline five main benefits of meditation.”

  - “Write an engaging introduction for a blog post on meditation.”

  - “Elaborate on the first benefit of meditation.”

  - “Summarize the blog post and conclude with a call-to-action.”

- Test the Prompts: Run each prompt individually and adjust as needed.

- Chain the Prompts: Connect the output of one prompt to the next one.

- Evaluate and Refine: Check the final blog post and make necessary modifications.
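The blog post example can be sketched in code as a list of named prompt templates, where each template may refer to the outputs of earlier steps. This is an illustration under stated assumptions: `BLOG_STEPS`, `run_blog_chain`, and `stub_llm` are hypothetical, and the templates are lightly adapted from the prompts above so that they carry earlier outputs forward.

```python
from typing import Callable, Dict

# Each entry is (result name, template). Placeholders such as {title}
# are filled with the outputs of earlier steps.
BLOG_STEPS = [
    ("title", "Generate a catchy title for a blog post on {topic}."),
    ("outline", "Outline five main benefits of meditation for a post titled: {title}"),
    ("intro", "Write an engaging introduction for the post '{title}' using this outline:\n{outline}"),
    ("body", "Elaborate on each point in this outline:\n{outline}"),
    ("conclusion", "Summarize the blog post and conclude with a call-to-action.\n"
                   "Introduction:\n{intro}\nBody:\n{body}"),
]


def stub_llm(prompt: str) -> str:
    """Hypothetical model stub that echoes the first line of the prompt."""
    first_line = prompt.splitlines()[0]
    return f"(output for: {first_line})"


def run_blog_chain(topic: str, llm: Callable[[str], str]) -> Dict[str, str]:
    """Run the steps in order, storing every output under its step name."""
    context = {"topic": topic}
    for name, template in BLOG_STEPS:
        context[name] = llm(template.format(**context))
    return context


post = run_blog_chain("meditation benefits", stub_llm)
for name, text in post.items():
    print(f"{name}: {text}")
```

Storing outputs by name, rather than passing only the previous output along, lets a later step such as the conclusion draw on several earlier steps at once.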

Example 2: Coding a simple calculator

- Define Your Objective: Code a simple calculator in Python.

- Decompose the Task: Divide it into sub-tasks:

  - Set up the basic structure

  - Add addition function

  - Add subtraction function

  - Add multiplication function

  - Add division function

- Craft the Prompts: Formulate a prompt for each sub-task:

  - “Write the basic structure for a calculator in Python.”

  - “Add a function for addition in the calculator.”

  - “Add a function for subtraction in the calculator.”

  - “Add a function for multiplication in the calculator.”

  - “Add a function for division in the calculator.”

- Test the Prompts: Ensure each prompt generates the correct code snippet.

- Chain the Prompts: Link the output of one prompt to the next until the calculator code is complete.

- Evaluate and Refine: Check the final code, run it, and make any necessary adjustments.
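For reference, here is a sketch of the kind of code this chain might converge on, with one function per sub-task. It was written by hand for illustration rather than generated by a model, and the names (`add`, `subtract`, `multiply`, `divide`, `calculate`) are our own choices.

```python
def add(a: float, b: float) -> float:
    return a + b


def subtract(a: float, b: float) -> float:
    return a - b


def multiply(a: float, b: float) -> float:
    return a * b


def divide(a: float, b: float) -> float:
    if b == 0:
        raise ZeroDivisionError("cannot divide by zero")
    return a / b


# Basic structure: map each operator symbol to its function.
OPERATIONS = {"+": add, "-": subtract, "*": multiply, "/": divide}


def calculate(a: float, symbol: str, b: float) -> float:
    """Apply the operation named by symbol to a and b."""
    if symbol not in OPERATIONS:
        raise ValueError(f"unsupported operator: {symbol}")
    return OPERATIONS[symbol](a, b)


print(calculate(2, "+", 3))
print(calculate(9, "/", 3))
```

Running a few known calculations like these is a simple way to carry out the final evaluation step.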

Exploring Common Prompt Chain Patterns

Prompt chaining is a potent instrument for AI that allows it to accomplish complex tasks by breaking them down into smaller, more manageable phases. Here, we explore some common patterns in prompt chaining:

Chain-of-thought prompting

This method prompts the model to work through a larger task as a sequence of intermediate reasoning steps before it gives a final answer. It’s intuitive and mimics the way humans approach problem-solving.
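As a small illustration, a chain-of-thought prompt can ask the model to reason step by step and then put the result on a clearly marked final line, which makes the answer easy to pull out. The `build_cot_prompt` and `extract_answer` helpers and the `Answer:` convention below are assumptions made for this sketch, and `sample_response` is a hand-written reply, not real model output.

```python
import re
from typing import Optional


def build_cot_prompt(question: str) -> str:
    """Ask for step-by-step reasoning followed by a marked final answer."""
    return (
        f"Question: {question}\n"
        "Think through the problem step by step, then give the result "
        "on a final line formatted as 'Answer: <value>'."
    )


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if absent."""
    matches = re.findall(r"^Answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


prompt = build_cot_prompt("Three boxes hold four pens each. How many pens are there?")
sample_response = "There are 3 boxes with 4 pens each.\n3 * 4 = 12.\nAnswer: 12"
print(extract_answer(sample_response))
```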

Zero-shot prompts

These prompts describe exactly what needs to happen in a task without providing any worked examples. They’re direct and straightforward, making them useful for tasks requiring specific outcomes.

Few-shot prompts

These prompts provide a few examples of the desired output before asking the AI to generate its own. This helps guide the AI toward the intended result.
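The difference between the two styles is easy to see side by side. In this sketch, `build_zero_shot` and `build_few_shot` are hypothetical helpers, and the `Text:` / `Label:` layout is just one possible convention.

```python
from typing import List, Tuple


def build_zero_shot(instruction: str, text: str) -> str:
    """Instruction only: no worked examples."""
    return f"{instruction}\n\nText: {text}\nLabel:"


def build_few_shot(instruction: str, examples: List[Tuple[str, str]], text: str) -> str:
    """Instruction followed by a few solved examples, then the new input."""
    blocks = [f"Text: {example}\nLabel: {label}" for example, label in examples]
    blocks.append(f"Text: {text}\nLabel:")
    return instruction + "\n\n" + "\n\n".join(blocks)


instruction = "Classify the sentiment of the text as positive or negative."
examples = [
    ("I loved this course.", "positive"),
    ("The app keeps crashing.", "negative"),
]
zero_shot = build_zero_shot(instruction, "The session was relaxing.")
few_shot = build_few_shot(instruction, examples, "The session was relaxing.")
print(few_shot)
```

Both prompts end with an open `Label:` line, so the model’s reply slots into the same position the examples demonstrate.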

Self-consistency and ReAct techniques

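These advanced prompt engineering techniques are designed to enhance the AI’s reasoning abilities. Self-consistency samples several reasoning paths for the same question and keeps the answer they most often agree on, while ReAct interleaves the model’s reasoning with actions, such as tool calls, and feeds the results back into the next step.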
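Self-consistency in particular is simple to sketch: ask the same question several times and take a majority vote over the answers. The example below covers only that voting step (not ReAct). `self_consistent_answer` is a hypothetical helper, and `stub_sampler` replays canned answers in place of a model sampled at a non-zero temperature.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, Tuple


def self_consistent_answer(
    question: str, sample: Callable[[str], str], n_samples: int = 5
) -> Tuple[str, float]:
    """Sample several answers and return the most common one with its vote share."""
    if n_samples < 1:
        raise ValueError("n_samples must be at least 1")
    answers = [sample(question) for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n_samples


# Hypothetical sampler: canned answers standing in for varied model outputs.
_canned = cycle(["12", "12", "13", "12", "11"])


def stub_sampler(question: str) -> str:
    return next(_canned)


answer, agreement = self_consistent_answer("What is 3 * 4?", stub_sampler)
print(answer, agreement)
```

The vote share is a rough confidence signal: low agreement suggests the question or the prompt needs another look.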

Clear syntax use

A clear syntax in your prompts ensures easy communication with language models and makes outputs easier to parse.
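For instance, you can fence the input text with delimiters and ask for a reply in JSON, so the next step in the chain can parse it reliably. The helpers `build_extraction_prompt` and `parse_reply`, the key names, and the sample reply are assumptions made for this sketch.

```python
import json
from typing import Dict


def build_extraction_prompt(email_text: str) -> str:
    """Delimit the input with triple quotes and request a JSON-only reply."""
    return (
        "Extract the sender and the requested meeting date from the email "
        "between the triple quotes.\n"
        'Reply with JSON only, using the keys "sender" and "date".\n\n'
        f'"""\n{email_text}\n"""'
    )


def parse_reply(reply: str) -> Dict[str, str]:
    """Parse the model reply and verify that the expected keys are present."""
    data = json.loads(reply)
    if not isinstance(data, dict):
        raise ValueError("Reply is not a JSON object")
    missing = {"sender", "date"} - data.keys()
    if missing:
        raise ValueError(f"Reply is missing keys: {sorted(missing)}")
    return data


prompt = build_extraction_prompt("Hi, can we meet on 2 May? Thanks, Ana")
sample_reply = '{"sender": "Ana", "date": "2 May"}'  # hand-written example reply
print(parse_reply(sample_reply))
```

Validating the reply before passing it on keeps a malformed output from silently breaking the later steps of a chain.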

The Role of Visual Programming in Chaining Prompts

Visual programming plays a pivotal role in chaining prompts, enhancing the user experience, and making the process more intuitive.

Here’s how:

- Ease of Use: Visual programming allows users to chain prompts by dragging and dropping elements, making it accessible even to those without extensive coding knowledge.

- Real-Time Visualization: It provides a graphical representation of the prompt chain, enabling users to see the flow of information and the relationship between different prompts.

- Error Detection: By visually mapping out the prompt chain, it becomes easier to spot errors or bottlenecks, improving the quality of the final output.

- Collaboration: Visual programming tools often come with collaboration features, allowing multiple users to work together on the same prompt chain.

- Scalability: They provide a scalable solution for managing complex chains of prompts, making it easier to add, modify, or remove prompts as needed.
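Behind the drag-and-drop surface, a visual editor of this kind usually stores the chain as a graph of nodes and connections. The sketch below shows one plausible representation, not how any particular tool works. It uses `graphlib` from the Python standard library (Python 3.9 or later); `GRAPH`, `TEMPLATES`, `run_graph`, and `stub_llm` are hypothetical names.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set, Tuple

# Each node maps to the set of nodes it depends on (its incoming connections).
GRAPH: Dict[str, Set[str]] = {
    "title": set(),
    "outline": {"title"},
    "intro": {"title", "outline"},
    "conclusion": {"intro"},
}

TEMPLATES: Dict[str, str] = {
    "title": "Write a title about {topic}.",
    "outline": "Outline a post titled {title}.",
    "intro": "Write an intro for {title} following {outline}.",
    "conclusion": "Write a conclusion that matches this intro: {intro}",
}


def stub_llm(prompt: str) -> str:
    """Hypothetical model stub that wraps the prompt in angle brackets."""
    return f"<{prompt}>"


def run_graph(
    graph: Dict[str, Set[str]],
    templates: Dict[str, str],
    llm: Callable[[str], str],
    inputs: Dict[str, str],
) -> Tuple[List[str], Dict[str, str]]:
    """Run every node after the nodes it depends on."""
    results = dict(inputs)
    order = list(TopologicalSorter(graph).static_order())
    for node in order:
        results[node] = llm(templates[node].format(**results))
    return order, results


order, results = run_graph(GRAPH, TEMPLATES, stub_llm, {"topic": "meditation"})
print(order)
```

Because the execution order is derived from the connections, adding, modifying, or removing a prompt only means editing the graph, and a circular connection is reported as an error (`graphlib.CycleError`) instead of running forever.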

In-depth Analysis: The || Syntax in Chaining Prompts

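In tools that support it, the double pipe (||) syntax is a powerful convention for chaining prompts, allowing for more dynamic and flexible interactions with AI models. It is interpreted by the prompting tool that runs the chain rather than by the model itself.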

Here’s why:

Separation of prompts

The || symbol is a separator between different prompts in a chain, making it easier to structure and manage multiple prompts.

Contextual continuity

By using ||, you’re able to maintain the context from one prompt to the next. This enables the AI model to understand the relationship between prompts and generate more coherent responses.

Flexibility

The || syntax allows for flexibility in chaining prompts. You can easily add, remove, or rearrange prompts in a chain, making the process more adaptable to changing requirements.

Efficiency

Using || can reduce the length of your code and make it easier to read and debug, leading to more efficient programming practices.

In sum, the || syntax is an important aspect of prompt chaining that enhances the readability, efficiency, and effectiveness of your interactions with AI models.
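Since the handling of || depends on the tool, the following is only one possible interpretation, written to show the idea: split the text on ||, run the prompts in order, and place each output in front of the next prompt as context. `split_chain`, `run_piped_chain`, and `recording_llm` are hypothetical names and do not correspond to a specific product.

```python
from typing import Callable, List


def split_chain(chain_text: str) -> List[str]:
    """Split a chain on '||' and drop empty segments."""
    return [part.strip() for part in chain_text.split("||") if part.strip()]


def run_piped_chain(chain_text: str, llm: Callable[[str], str]) -> str:
    """Run the prompts in order, prefixing each with the previous output."""
    previous = ""
    for prompt in split_chain(chain_text):
        full_prompt = f"{previous}\n\n{prompt}" if previous else prompt
        previous = llm(full_prompt)
    return previous


calls: List[str] = []  # records what the stub model actually received


def recording_llm(prompt: str) -> str:
    """Hypothetical model stub that echoes the last line of the prompt."""
    calls.append(prompt)
    return f"out({prompt.splitlines()[-1]})"


chain = "Generate a title || Outline the post || Write the intro"
final = run_piped_chain(chain, recording_llm)
print(final)
```

Rearranging the chain then means editing a single line of text, which is the flexibility described above.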

Conclusion

As we’ve explored throughout this article, prompt chaining is a powerful technique that’s shaping the future of generative AI applications. From understanding common patterns and the role of visual programming to mastering the || syntax, we’ve seen how these elements come together to create more effective, intuitive, and efficient AI interactions.

The art of prompt chaining is continually evolving, offering new possibilities for enhancing AI models’ problem-solving capabilities. As we move forward, these techniques will become increasingly significant in harnessing the full potential of AI, from simplifying complex tasks to generating creative content.

At Inclusion Cloud, we’re committed to helping you navigate this exciting landscape. Contact us: our team of experts is here to assist you in optimizing your prompting strategies, ensuring that your AI applications perform at their best and deliver tangible results for your company.