AI isn’t coming for our jobs, but it can take other things. While this technology has introduced new solutions and innovations for many businesses, it also presents new threats. Although there is still debate over whether these threats are fundamentally different from existing cybersecurity risks, some initiatives are working to define and categorize emerging AI security risks.

But what exactly are these threats? How do they differ from regular cyberattacks? And, more importantly, how can they be prevented? In today’s article, we’ll explore the cybersecurity landscape of AI systems to help you integrate them into your business workflows safely.

Are AI Security Threats the Same as Those of Regular Applications?

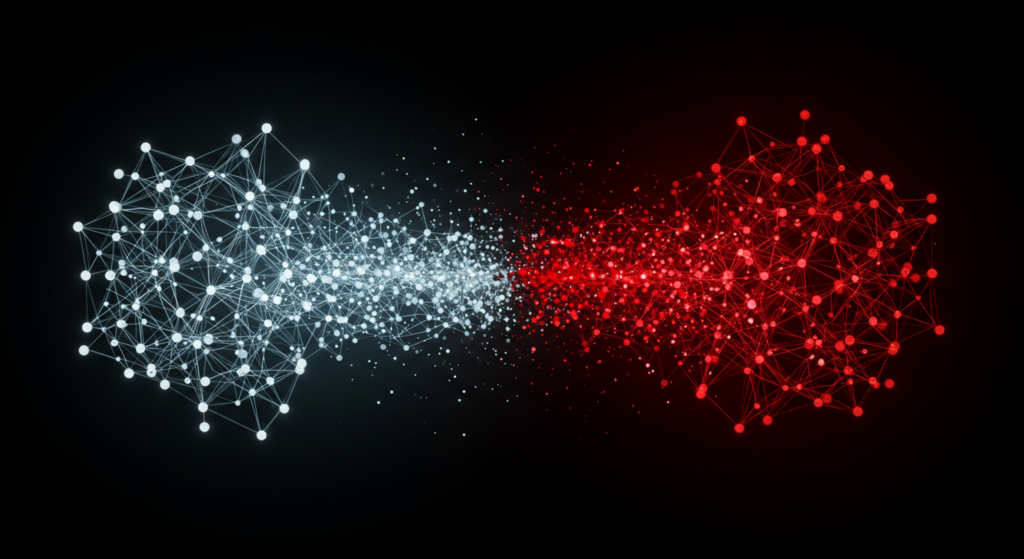

It’s true that some AI threats are not a novelty. For example, data poisoning an ML’s training data is not too different from any other regular app. However, AI systems require specialized defenses because of three basic facts:

- New attack surfaces: AI models can be manipulated, extracted or saturated with attacks such as Distributed Denial of Service DDoS.

- AI-Driven Threats: Attackers can use AI to automate cyberattacks, increasing scale and efficiency.

- Explainability Issues: Unlike traditional apps, AI’s “black box” nature makes threats harder to detect.

What Are the Most Common AI Security Risks?

As the World Economic Forum has pointed out, the idea of AI as an asset that needs to be protected is relatively new. This may be because the technology is still in its early stages of adoption. But, in any case, we can already identify some specific threats for AI systems. In the following sections, we will examine each of these AI security risks in detail.

1. Denial of Service (DoS) & Distributed Denial of Service (DDoS)

Both Denial of Service (DoS) and Distributed Denial of Service (DDoS) attacks are AI security risks that consist in flooding a system with excessive requests, overwhelming its resources and making it unavailable. However, while DoS attacks usually come from a single source, DDoS threats are more advanced, using multiple compromised devices (botnets) to launch large-scale assaults, making them harder to block.

For AI systems, both kinds of attacks can disrupt model training, overload APIs, or cripple AI-driven services. Since AI relies on constant data flow, a successful DoS or DDoS attack can halt real-time decision-making, degrade performance, or even cause model failures. Robust security measures are crucial to mitigate these threats.

2. Adversarial Attacks

Adversarial attacks consist in manipulating AI models by subtly altering input data to deceive their decision-making. These attacks exploit AI vulnerabilities, causing misclassifications or incorrect predictions. Unlike data poisoning, they target already-trained models in real time.

These AI security risks can lead to critical failures—for example, self-driving cars misidentifying stop signs or fraud detection models missing threats. As AI adoption grows, strengthening models against these attacks with robust training, anomaly detection, and adversarial defense techniques will be essential to maintaining security and reliability.

3. Data poisoning

Data poisoning is one of the most common AI security risks and, as it names signals, consists in corrupting an AI model by injecting malicious data during training, leading to biased, inaccurate, or harmful outputs. Unlike adversarial attacks, it alters the model’s foundation, making errors harder to detect.

This can cause misinformation, security vulnerabilities, or ethical concerns. For example, attackers may subtly bias AI decisions or degrade performance, impacting fraud detection, medical diagnoses, or automated security systems. Regular data audits and robust validation are essential defenses.

4. Prompt Injection

Prompt Injection attacks are AI security risks that exploit the model’s natural language processing capabilities. They manipulate AI models by injecting deceptive or malicious inputs to override instructions. These attacks basically trick AI into generating unauthorized, biased, or harmful outputs.

This threat affects chatbots, automated customer support, and AI-driven code generation tools, potentially leaking sensitive data, spreading misinformation, or executing harmful commands. Besides, preventing these attacks requires strict input validation, user access controls, and fine-tuning AI models to resist manipulation while maintaining accuracy and reliability.

5. Supply Chain Attacks

Supply chain attacks target vulnerabilities in third-party components—software, datasets, or hardware—used to build AI systems, embedding malware or manipulating models. So, unlike direct attacks on AI itself, supply chain attacks exploit trusted sources, making them harder to detect.

This way, these AI security risks affect pretrained models, APIs, cloud services, and hardware accelerators, leading to data breaches, model corruption, or unauthorized access. However, it is possible to prevent them through rigorous vetting of third-party providers, cryptographic verification, and continuous monitoring.

6. Model Inversion

Model inversion reconstructs sensitive training data by analyzing an AI model’s outputs, potentially exposing personal or proprietary information. They consist in replicating an AI model by repeatedly querying it to recreate its functionality without access to its original architecture.

Unlike adversarial attacks, these AI security risks focus on unauthorized access and intellectual property theft. They affect ML APIs, cloud-based AI services, and proprietary models, leading to data leaks, loss of competitive advantage, and compliance violations.

The Infrastructure of an AI Security Operations Center (SOC)

So, let’s dive into the technical side of preventing AI security risks. Just like with regular applications, any organization looking to prevent AI security risks needs to consider a Security Operations Center (SOC) tailored to its specific context.

Naturally, the setup will vary from business to business, but a strong AI SOC should include the following key components:

| Component | Description | Relevant Tools & Platforms |

| AI Model Security & Access Control | Ensures AI models and data are protected with role-based access, encryption, and monitoring. | Azure Machine Learning Security Controls |

| AWS IAM for AI Services | ||

| SAP AI Core Security | ||

| Oracle Cloud AI Access Control | ||

| ServiceNow IAM Integrations | ||

| Adversarial Attack Detection | Detects and mitigates attacks like data poisoning, model evasion, and adversarial inputs. | Microsoft Counterfit (AI Red Teaming) |

| AWS Macie (Data Protection for AI Models) | ||

| IBM Adversarial Robustness Toolbox | ||

| AI Data Integrity & Governance | Ensures data used for AI training is accurate, unbiased, and free from tampering. | ServiceNow AI Governance |

| SAP Data Intelligence | ||

| Model Explainability & Bias Detection | AI-driven monitoring for bias, fairness, and model transparency to meet compliance standards. | SAP AI Ethics Guidelines |

| Oracle AI Explainability Framework | ||

| AWS SageMaker Clarify | ||

| Secure AI Development Lifecycle (MLOps Security) | Implements secure coding, testing, and deployment of AI models with CI/CD security. | ServiceNow DevSecOps |

| AWS SageMaker Model Monitor | ||

| AI API Security & Monitoring | Protects AI/ML APIs against unauthorized access, data leakage, and API abuse. | AWS API Gateway + WAF |

| Azure API Management Security | ||

| SAP API Management | ||

| Oracle API Gateway Security | ||

| AI Supply Chain Security | Secures third-party AI models, datasets, and dependencies from vulnerabilities. | ServiceNow Third-Party Risk Management (TPRM) |

| AWS AI Supply Chain Integrity | ||

| Model Watermarking & IP Protection | Embeds identifiers in AI models to prevent theft and ensure intellectual property security. | Microsoft AI Watermarking Research |

| AWS Digital Rights Management (DRM) for AI | ||

| Oracle AI Model Fingerprinting | ||

| Compliance & Regulatory Monitoring for AI | Ensures AI models meet industry standards like GDPR, HIPAA, and NIST AI RMF. | ServiceNow AI Governance & Compliance |

| SAP Risk Management | ||

| Azure AI Compliance Center | ||

| AWS Audit Manager for AI |

The anatomy of an AI cybersecurity team

Now that we understand the SOC infrastructure for an AI system, we need to discuss the team responsible for managing it. Naturally, the composition will vary depending on an organization’s size, needs, and available resources. However, the Inclusion Cloud team has summarized the key roles of an AI cybersecurity team in the following table:

| Role | Description | Key Knowledge | Technical Skills |

| AI Security Lead | Oversees AI security strategy and ensures compliance. | Model risk management | ML security |

| Threat modeling in AI | Secure AI frameworks | ||

| Regulatory compliance (GDPR, AI Act) | Incident management | ||

| ML Security Engineer | Implements defenses against attacks and ensures data privacy. | Adversarial attacks | TensorFlow/PyTorch |

| Differential privacy | Cryptography applied to ML | ||

| Data poisoning | Secure MLOps | ||

| AI Red Team | Simulates attacks to find vulnerabilities in AI models. | Reverse engineering of models | Python |

| Adversarial ML | AI pentesting tools (e.g. Adversarial Robustness Toolbox) | ||

| AI Security Analyst | Monitors for security incidents and ensures compliance. | Log analysis | Security Information and Event Management (SIEM) |

| Anomaly detection in AI models | Adversarial attack detection in production | ||

| Compliance & Risk Officer | Assesses risks, ensures compliance, and audits for bias. | AI and privacy regulations | ML risk assessment |

| Bias and explainability audits | Data governance |

Making AI Security Accessible

So, as we’ve seen, AI systems not only need an entire infrastructure to work, but also to protect themselves from the multiple AI security risks. However, these cybersecurity frameworks can be costly, often requiring enterprise-level budgets that mid-sized companies may not have.

This leave thousands of organizations with an impossible choice: limit AI adoption due to security concerns, remaining behind in an increasingly competitive and rapidly evolving market, or move forward without proper safeguards, increasing exposure to attacks.

But here is where the Inclusion Cloud team can help you. We can provide you with both consulting and skilled talent to set the foundations for your business AI adoption ensuring that your systems remain compliant, resilient, and effective. Let’s connect and leverage your business AI systems without compromising your security!

And don’t forget to follow us on LinkedIn for more upcoming AI insights and other industry trends!

Other resources

AI Trends 2025: Let’s Take a Hike to AI Maturity

What Is SaaS Sprawl? Causes, Challenges, and Solutions

IT Strategy Plan 2025: The Ultimate Tech Roadmap for CIOs

Is Shadow IT Helping You Innovate—Or Inviting Risks You Don’t Need?

The API-First Strategic Approach: 101 Guide for CIOs

What Are Multiagent Systems? The Future of AI in 2025

Event-Driven Architecture: Transforming IT Resilience

Service-Oriented Architecture: A Necessity In 2025?

Why to Choose Hybrid Integration Platforms?

Sources

World Economic Forum – Artificial Intelligence and Cybersecurity: Balancing Risks and Rewards

AXIS – Road to AI Maturity: The CIO’s Strategic Guide for 2025